The Internet of Things (IoT) is a family of technologies enabling connectivity, sensing, inference, and action [1, 2] that is expected to comprise 30 billion devices by 2020 [3]. IoT’s meteoric growth presents opportunities for large-scale data collection and actuation. However, this growth also amplifies vulnerabilities in critical infrastructure networks and systems [4]. Civilian and military leadership remain insufficiently informed of information security risks and threats, degrading security through network effects [5, 6]. This article considers the vulnerability trajectory of internet-enabled IoT devices and presents the concept of an artificially intelligent Cognitive Protection System (CPS) as a solution capable of securing critical infrastructure against emerging threats.

Challenges Posed by the Internet of Things

Scaling Problems

The recent proliferation of IoT devices and services has heightened the risk of unauthorized system control and data leakage [7]. Commensurate improvements in system dependability and resilience have not materialized alongside this growth [6, 8]. The defensible perimeter of a given system grows with increases in its interactivity, with a single unprotected element constituting a launchpad for attacks on connected devices [4, 9]. These vulnerabilities exist at the intersections of cyber, physical, and human elements, areas that are critical to the value IoT offers and easily probed through standard network interfaces [10]. For example, in 2014, the non-profit Open Web Application Security Project surveyed IoT perimeters at large and identified 19 IoT attack surfaces and 130 vulnerability types [8]. Some modes of attack, such as the use of rogue applications, unauthorized communications, improper patching, and poor encryption [2], mirror the challenges of information technology (IT) more generally, while others specifically leverage IoT’s physicality. Device transference in IoT systems introduces new attack vectors [11], and actuators may cause physical damage [7, 9]. These problems are only worsened by the rapid design and commercial manufacturing cycle, and by the presence of enduring, hard-coded vulnerabilities that may outlast the lifespan of a device’s original manufacturer or vendor [7, 9].

IoT attacks seek to cause information leakage, denial of service, or physical harm [2]. Exploits of IoT vulnerabilities target hardware, software, people, or processes, and their severity can be amplified by configuration problems or Trojan horses [1]. In some cases, analytics themselves constitute an attack [12], and in all cases, the characteristics of an attack evolve over time [13]. The financial cost of information insecurity is significant, with the average corporate data breach in 2017 costing $3.62 million [5], and taking a median of 200 days to detect in 2014 [10]. In a military context, system complexity is higher (e.g., Department of Defense [DoD] “green” buildings have tens of thousands of potentially vulnerable sensors [12]), techniques are more sophisticated, and potential consequences are more severe.

Hyperconnectivity has also eroded the security provided by airgaps. Remote management unlocks opportunities for unauthorized users to jump across networks and commandeer programmable logic controllers, placing infrastructure at risk due to weak supervisory control and data acquisition (SCADA) systems [5, 14].

The Unintended Consequences of Connectivity

Because the IoT provides such a large attack surface, it can serve as a force multiplier for malicious actors. Commodity devices have already disrupted critical infrastructure (e.g., the DynDNS distributed denial-of-service [DDoS] attack, in which “Mirai” malware exploited under-protected consumer webcams) [7, 15–17] and silently collected unauthorized information (via VPNFilter router malware) [18]. Hospitals and cities have been locked down by ransomware [18–20]. Cyberattacks are also increasing in frequency and complexity [10], posing a growing threat to mission readiness [13].

Just as a personal fitness application inadvertently shared the locations of warfighters in 2018 [7, 21], decision-makers invite unanticipated consequences in the interest of short-term goals, for example by placing hackable voice service microphones in cars or cameras at road intersections [22]. The same “digital breadcrumbs” that make IoT useful for intelligence data collection and analysis [23, 24] can also leak intelligence-related data; one potentially common example is the risk of smart televisions serving as a conduit for intercepting two-factor authentication audio [2]. A warfighter using a WiFi-controlled power outlet may leak network credentials, allowing the plain-text interception of encrypted data. Alternatively, multiple outlets could be cycled to trigger a harmonic, causing an electrical power substation to enter a safety shutoff [25]. The true cost of inexpensive commodity IoT devices is high, and the risks are difficult to anticipate. For example, IT personnel may not consider a scenario in which routers are corrupted to track the location of a deployed unit, and thus may not defend against such a possibility [26]. Defense contractors may not know (or may forget) that an attacker could lower their smart thermostat’s temperature, causing internal water lines to burst in the winter. Installing a smart lock means not only that a key can be copied, but also that digital access can be subverted [7].

Proposed Solutions

Conventional cyber-physical security systems rarely consider other devices as potential indirect entry points [6]. A critical first step is to be aware of all the capabilities of a new technology, whether or not their use is envisioned, and to explain them to those potentially impacted. In the context of IoT and critical infrastructure, better education, thoughtful design, and vigilance will improve outcomes [16]. The National Institute of Standards and Technology, the Center for Internet Security, and IEEE have all issued IoT security guidelines, while the Federal Trade Commission encourages engineered-in protection. However, these guidelines lack the means for effective enforcement [7]. The DoD suggests conducting a risk analysis and implementing IoT only where necessary, while ensuring that devices use end-to-end encryption, monitoring network traffic, and using equipment from trusted vendors with managed supply chains [12]. This goal is complicated by the fact that risk assessment is entangled with the cascading effects of connectivity [13].

Although DoD guidance documents further suggest how to purchase, set up, use, and decommission devices [2], civilian IoT networks are capable of undermining internal security efforts (e.g., consumer routers denying service to DoD critical network infrastructure, or vice-versa [12]). The Department of Homeland Security and the White House have suggested that government and industry must collaborate to ensure that security advances leave the internet open, interoperable, secure, and reliable, while protecting critical networks and computing infrastructure [18, 27]. Outside these frameworks, questions remain. How can we be trusted to get IoT security right, when individual users struggle to keep passwords safe and software patched? If information security education does not seem effective, how can practitioners mitigate misconfiguration risks? And, what if computation within durable infrastructure is incapable of running next-generation algorithms?

These questions highlight a problem central to IoT security: the need to confront resource constraints. For example, sensor redundancy improves data trustworthiness, but only at the expense of increased energy, computation, and financial costs [11]. Context-aware systems, discussed below, provide one approach to implementing resource-conscious, adaptive IoT security.

Cognitive Protection Systems

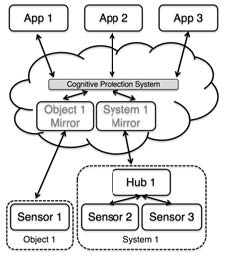

An emerging connectivity architecture implements “Data Proxies” to digitally duplicate the physical world on remote endpoints, using sensor data to build state-space system representations. Unlike digital twins, which typically use the richest-available information to mirror an object, Data Proxies intelligently schedule sensor sampling to meet application demands while minimizing cost. Physical and statistical models rooted in control theory expand these sparse data. Proxies may therefore indirectly measure the state of unobservable variables, and do so using a fraction of the resources required for traditional twinning [28–30]. For example, in developing a usage-based insurance application, the Proxy reduced mobile device power and bandwidth consumption by a factor of 20. This architecture is illustrated in Figure 1.
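The idea of scheduling samples against a model can be illustrated with a minimal sketch. It assumes a single slowly varying quantity tracked by a scalar Kalman filter under a random-walk model; the class name, the noise values, and the uncertainty threshold are hypothetical choices made for illustration and are not taken from the cited Data Proxy work [28–30].

```python
from dataclasses import dataclass


@dataclass
class ScalarProxy:
    """Toy Data Proxy mirroring one slowly varying quantity (e.g., temperature)."""
    estimate: float        # current mirrored state
    variance: float        # uncertainty of the mirrored state
    process_noise: float   # assumed growth in variance per time step
    sensor_noise: float    # assumed variance of a single sensor reading
    max_variance: float    # uncertainty the application is willing to tolerate

    def predict(self) -> None:
        # Random-walk model: the estimate is held, its uncertainty grows.
        self.variance += self.process_noise

    def needs_sample(self) -> bool:
        # Spend energy and bandwidth only when the model alone is too uncertain.
        return self.variance > self.max_variance

    def update(self, reading: float) -> None:
        # Standard scalar Kalman measurement update.
        gain = self.variance / (self.variance + self.sensor_noise)
        self.estimate += gain * (reading - self.estimate)
        self.variance *= 1.0 - gain


def run_proxy(proxy: ScalarProxy, readings) -> int:
    """Step through time and return how many readings were actually requested."""
    samples = 0
    for reading in readings:
        proxy.predict()
        if proxy.needs_sample():
            proxy.update(reading)
            samples += 1
    return samples
```

Calling `run_proxy` on a long list of available readings requests only a fraction of them, while the mirrored estimate still converges toward the measured value. Loosening `max_variance` trades accuracy for fewer samples, which is the cost-versus-fidelity choice the text describes.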

In this architecture, devices only interact directly with their own digital avatar residing in the Cloud, Fog, or another unconstrained computing node. Avatars then interact within the unconstrained computing environment, enabling a system of digital guardians using scalable computation to monitor and moderate interactions [17].

Figure 1. Objects and systems are mirrored in remote computation, where scalable resources allow the CPS to simulate commands and identify anomalies.

By limiting direct device communications to a single endpoint, the number of exposed channels in need of protection is reduced, while mirroring devices on unconstrained hardware creates a pathway to support computationally-intensive improvements. Another advantage of device mirrors interacting with one another (rather than the devices themselves) is that data can be uploaded once and multiply used. This reduces redundant sampling to conserve resources such as battery life and network bandwidth [28–30].
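The "upload once, use many times" property can be sketched as a mirror that caches the latest device value and serves every application from that copy. This is an illustrative sketch only: the `Avatar` class, its `max_age_s` freshness limit, and the `read_device` callable are hypothetical names, not part of the cited architecture.

```python
class Avatar:
    """Cloud-side mirror of one device; applications read the mirror, never the device."""

    def __init__(self, read_device, max_age_s: float):
        self._read_device = read_device  # callable that actually samples the device
        self._max_age_s = max_age_s      # how long a cached value is considered fresh
        self._value = None
        self._stamp = None
        self.device_reads = 0            # number of times the real device was sampled

    def get(self, now_s: float):
        """Return the mirrored value, sampling the device only if the copy is stale."""
        stale = self._stamp is None or now_s - self._stamp > self._max_age_s
        if stale:
            self._value = self._read_device()
            self._stamp = now_s
            self.device_reads += 1
        return self._value
```

Several applications calling `get` within the freshness window share a single device read, so battery and bandwidth costs do not grow with the number of consumers.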

This device-to-device airgap is similar to the way a young child interacts with the world: delegating uncertain actions through their guardian. With Data Proxies, one or more “precocious” devices communicate through a secure channel with a digital guardian possessing broader world-views, richer perception, and enhanced cognitive powers ideally suited to protecting dependents. This direct-to-Cloud approach has a further advantage in the context of IoT: device replacement is simplified, as only a single interaction must be assumed (the new device assumes the old device’s mirror, rather than requiring each device and service interaction to be separately configured). This improves system flexibility and adaptability, creating an interoperable and dynamic glue layer resistant to fragmentation.

It is this resource-conserving, guardian-mirrored architecture that forms the basis for an IoT-centric security architecture known as a Cognitive Protection System (CPS). CPSs address three types of targeted and unintentional threats:

- data threats, in which information (flow) compromises a protected system

- control threats, wherein malicious or invalid commands result in a dangerous outcome

- system threats, such as mechanical faults

Their performance requires trustworthy IoT hardware and proper installation, configuration, and maintenance procedures. This is a challenge, as devices can be subverted in the supply chain, in manufacturing, at time of installation, and during use [12].

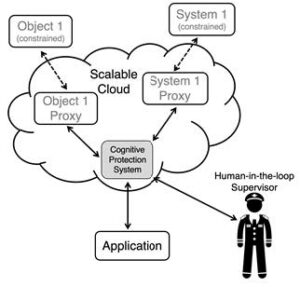

CPSs are built upon the Data Proxy architecture’s underlying system models, and upon the knowledge that information flows only between avatars. The interaction of device mirrors using the CPS as an intermediate gateway is depicted in Figure 2. Data Proxies work in conjunction with known and learned rule definitions, artificial intelligence, and simulation to enable the CPS’s constituent elements: “Cognitive Firewalls” (CF) and “Cognitive Supervisors” (CS).

Figure 2. Object avatars and applications interact with one another through the CPS. There are no device-to-device connections, reducing exposed communications. In the event the CPS has low confidence in a critical decision, external verification may be requested.

Traditional firewalls monitor network traffic to identify possible information leakage, which is necessary but not sufficient to protect against the physical attacks possible with the IoT, such as maliciously issuing commands that cause equipment to exceed safe control limits. Part of a CPS, a CF is a self-learning system capable of evaluating commands for safety in context, allowing only safe commands to pass from the Cloud to the end device.

CFs use scalable computation, context information, system models, and observations to test commands before execution. When commands are sent to connected devices, they are simulated in digital environments run within the Cloud or Fog. If the results are undesirable, the command is blocked; if the results are questionable, the command is sent up a hierarchical chain to seek authorization [28–30]. Safe commands pass to the end device unaltered, with delay dependent upon network conditions and available computational power (for instance, a model robotic arm within a factory tested and relayed or rejected commands within 20 milliseconds). The use of artificial intelligence (AI) allows the system’s policies to adapt to unpredictable attacks, acting autonomously rather than upon rigid rules. Applying context brings reasoning to the system to help address unexpected scenarios. For example, a CF might learn the safe temperature range for the occupants within an environment and reject commands that cause an HVAC system to exceed these limits.
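The block, escalate, or pass decision described above can be sketched for the HVAC example. The sketch assumes a first-order room model and a fixed safe temperature band; in the system described, the band and the model would be learned rather than hard-coded. All names, limits, and rates here are hypothetical.

```python
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"        # forward the command to the device unaltered
    ESCALATE = "escalate"  # questionable result: seek authorization up the chain
    BLOCK = "block"        # undesirable result: reject the command


def simulate_room(temp_c: float, setpoint_c: float, minutes: int,
                  rate_per_min: float = 0.1) -> float:
    """Hypothetical first-order model: room temperature relaxes toward the setpoint."""
    for _ in range(minutes):
        temp_c += rate_per_min * (setpoint_c - temp_c)
    return temp_c


def check_setpoint(current_c: float, setpoint_c: float,
                   safe=(16.0, 28.0), margin: float = 2.0,
                   horizon_min: int = 120) -> Verdict:
    """Simulate a setpoint command and classify its predicted outcome."""
    predicted = simulate_room(current_c, setpoint_c, horizon_min)
    low, high = safe
    if predicted < low or predicted > high:
        return Verdict.BLOCK
    if predicted < low + margin or predicted > high - margin:
        return Verdict.ESCALATE
    return Verdict.ALLOW
```

With these assumed limits, a setpoint of 22 °C from a 21 °C room is allowed, 27 °C is escalated because it lands within the margin of the upper limit, and 5 °C is blocked.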

One variation on the CF protects against localized attacks. The CF may be run partially offline; for example, within a powerful gateway device connected to sensing and actuation modules within an aircraft, or operated within a processor’s secure enclave to monitor dependent virtual machines. These implementations display lower latency, at the cost of less-scalable computation, but they serve a valuable purpose in responding to threats or connectivity lapses in real time. An offline CF could have prevented the physical effects of the Stuxnet worm: by testing the commands associated with the worm’s rootkit, it could have identified the impact of malicious commands targeted at SCADA systems and blocked those intended to cause catastrophic damage to centrifuges.

Local CFs can also respond to mission-critical faults before sensor data arrives at a remote server for analytics and decision making. A hybrid local/remote approach can mirror the human body’s unconscious impulse response. For example, when touching a hot stove, the body retracts the arm autonomously, before the pain signal reaches the brainstem. Afterwards, the brain processes the injury and devises rules to avoid a repeat.

Note that a CF can monitor states that are not obviously physical in nature. For example, a command requesting a high-frequency temperature reading (when the measured environment has a long time constant) could be identified as a problematic request. Over-sampling might result in poor quality data, the saturation of onboard computation, or the depletion of a device’s battery, which is an extreme form of a denial-of-service attack. A CF could simulate the impact of data requests on resource consumption, compute the likelihood of a locked-up processor or a dead battery, and reject the request or automatically reduce temperature measurement to a safe rate.
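A simplified version of this resource check is sketched below. It replaces the likelihood computation with a deterministic energy budget, and it assumes that a fixed number of samples per time constant is enough to capture the environment; both simplifications, along with every parameter name and value, are assumptions made for illustration.

```python
def max_safe_rate_hz(battery_j: float, joules_per_sample: float,
                     required_life_s: float, time_constant_s: float,
                     samples_per_tau: float = 10.0) -> float:
    """Largest sampling rate that neither drains the battery early nor oversamples."""
    # Rate at which the battery would be exhausted exactly at the required lifetime.
    energy_limit = battery_j / (joules_per_sample * required_life_s)
    # Rate beyond which extra samples add little for a slow environment.
    physics_limit = samples_per_tau / time_constant_s
    return min(energy_limit, physics_limit)


def filter_sampling_request(requested_hz: float, **limits) -> float:
    """Return the rate to forward to the device: the request, clamped to a safe rate."""
    if requested_hz <= 0:
        raise ValueError("sampling rate must be positive")
    return min(requested_hz, max_safe_rate_hz(**limits))
```

A request for 100 Hz readings from a sensor in a room with a ten-minute time constant would be reduced to the safe rate, while a request already below that rate passes through unchanged. Rejecting the request outright, rather than clamping it, is an equally valid policy under the text's description.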

The same models behind resource-efficient mirroring and the CF enable the creation of CSs, artificially intelligent systems capable of identifying and responding to anomalies directly, or notifying a human when things start to feel wrong [28–30]. CSs apply context information and rules, learning the relationship between system inputs and outputs to raise alerts when performance does not match expectations. For example, a CS could learn the relationship among on-base water flow meters and autonomously identify a water leak or siphoning event. The approach also works in a digital context, and typical network traffic patterns can be learned—so that a base’s targeting by a DDoS attack can be detected and mitigated.
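The water-meter example can be sketched with a very simple learned relationship: the difference between a main meter and the sum of its sub-meters. The sketch assumes one main meter feeding several sub-meters and uses a z-score threshold on that residual. The `FlowSupervisor` class and its threshold are hypothetical, and a deployed CS would likely use richer models.

```python
from statistics import mean, stdev


class FlowSupervisor:
    """Toy Cognitive Supervisor for one main meter feeding several sub-meters."""

    def __init__(self, threshold_sigmas: float = 4.0):
        self.threshold_sigmas = threshold_sigmas
        self.baseline = 0.0  # learned typical unaccounted-for flow
        self.spread = 1.0    # learned variability of that flow

    @staticmethod
    def residual(main_flow: float, sub_flows) -> float:
        # Water entering the site that the sub-meters do not account for.
        return main_flow - sum(sub_flows)

    def fit(self, history) -> None:
        """Learn normal behavior from (main_flow, [sub_flows]) pairs."""
        residuals = [self.residual(m, s) for m, s in history]
        self.baseline = mean(residuals)
        self.spread = max(stdev(residuals), 1e-9)

    def is_anomalous(self, main_flow: float, sub_flows) -> bool:
        """Flag readings whose residual is far from what was learned."""
        z = (self.residual(main_flow, sub_flows) - self.baseline) / self.spread
        return abs(z) > self.threshold_sigmas
```

After `fit` is called on readings from normal operation, a sudden rise in main-meter flow that the sub-meters do not register is flagged as a possible leak or siphoning event, at which point the CS could respond or notify a human.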

Consider a real-world military example for the CPS: for over a decade, the DoD has increased its use of high-tech power distribution solutions to improve delivery efficiency. A remote installation might have its own microgrid, with programmable logic controllers managing diesel generators, transformers, or battery banks. Electronic infrastructure can be allowed online [31] to provide for remote monitoring in order for officers to estimate resource demands and schedule appropriate resupply, or else to disconnect non-critical circuits, prolonging operation when resupply is not imminent.

Putting the grid online offers insight and controllability benefits, but exposes critical infrastructure to exploitation. By implementing semi-local computation (an on-site Fog) to run a CPS, and learning context through physics-based models augmented with additional data (e.g., daily schedules, planned operations, number of individuals on-site, weather forecast), a microgrid could monitor itself and the CS could identify anomalies that suggest that energy is being consumed unexpectedly, or that a connected device poses a fire hazard [31].

The CF could possibly identify a malicious actor’s attempt to run the generator overspeed to cause an equipment-damaging power surge or early fuel depletion. In both cases, the CF could reject commands that would otherwise interrupt attached critical services and notify personnel of the attempted attack. The CPS is capable of serving as a critical tool in enabling secure and efficient connectivity for DoD operations, and improving system resilience in the face of emerging threats to the IoT.

Security-forward Thinking

Not all attacks are avoidable. The ability to recover from or regenerate performance after an unexpected event is important for securing IoT’s place in the management of critical infrastructure. Cyber-resilience bridges sustaining the operations of a system with ensuring mission execution and retaining critical function throughout an attack [13].

Specifically, resilient systems must plan for, absorb, recover from, and adapt to known and unknown threats through hardening, diversification, adaptability, and thoughtful degradation. Systems can propagate control in order to minimize cascading domino effects [13]. Resilience is developed and engineered at the micro-, meso-, or macro-scale, assuring the performance of individual components or interfaces, architectural or system properties, or entire missions, respectively [32].

Linkov & Kott [13] suggest the use of active agents (both human and artificial) to absorb, recover, and adapt to attacks. The proposed CPS discussed above, implemented properly, embodies this approach to self-healing systems. The most resilient systems both prevent attacks and prepare for unavoidable attacks. They continue operating while defending themselves; constrain or minimize an attack’s reach; and adapt to avoid future occurrences [32]. The DoD has identified smart, self-healing systems as an area for future development [33]. Here, too, adaptive AI could be applied to detect, respond to, and operate in the face of an ongoing attack. Using a CPS for heightened situational awareness will enable rapid response and attack mitigation, thereby improving outcomes. With broader connectivity, the models learned at one military installation can be readily deployed to others, developing digital herd immunity.

Conclusion

We have seen that pervasive connectivity and the expansion of the IoT pose opportunities and challenges in developing and sustaining the operation of critical infrastructure. DoD has the potential to take a leadership position in the IoT, working toward common goals of protecting, defending, and securing information infrastructure, digital networks, and remote actuators [5]. To build a safer future, education and awareness are critical; IoT practitioners must regularly conduct security surveys to identify all possible risks and approaches to remediation [2]. This includes the easily-foreseeable entry points as well as the evolving frontier of connectivity.

Finally, we explored the use of AI to develop a context-aware CPS that uses learned system models to test commands for safety in a simulated environment prior to execution. These same enabling technologies can build increased system resilience in the face of internal and external threats. Developers must make efforts to develop “smart, smart things” with embedded security [24], long lifecycles with computational headroom and provisions for upgradability, and support products for their entire lifecycles.

Practitioners and users need to carefully consider the unintended consequences of using a connected system. They should also remain cognizant of the fact that while it is necessary to follow best available security practices, those, too, can be undermined by a complex web of interactions.

References

1. Tedeschi, S., Mehnen, J., Tapolou, N., & Roy, R. (2017). Secure IoT devices for the maintenance of machine tools. Procedia CIRP, 59, 150–155. doi:10.1016/j.procir.2016.10.002

2. U.S. Government Accountability Office. (2017, July 27). Internet of Things: Enhanced assessments and guidance are needed to address security risks in DOD (GAO-17-668). Retrieved from https://www.gao.gov/assets/690/686203.pdf

3. Nordrum, A. (2016, August 18). Popular Internet of Things forecast of 50 billion devices by 2020 is outdated. IEEE Spectrum. Retrieved from https://spectrum.ieee.org/tech-talk/telecom/internet/popular-internetof-things-forecast-of-50-billion-devices-by-2020-is-outdated

4. Klinedinst, D. J., Land, J., & O’Meara, K. (2017, October). 2017 Emerging Technology Domains Risk Survey. Pittsburgh, PA: Carnegie Mellon University Software Engineering Institute. Retrieved from https://resources.sei.cmu.edu/library/asset-view.cfm?assetid=505311

5. Bardwell, A., Buggy, S., & Walls, R. (2017, December). Cybersecurity Education for Military Officers (MBA Professional Report). Monterey, CA: Naval Postgraduate School. Retrieved from https://calhoun.nps.edu/bitstream/handle/10945/56856/17Dec_Bardwell_Buggy_Walls.pdf?sequence=1&isAllowed=y

6. McDermott, T., Horowitz, B., Nadolski, M., Meierhofer, P., Bezzo, N., Davidson, J., & Williams, R. (2017, September 29). Human Capital Development – Resilient Cyber Physical Systems (SERC-2017-TR-113). Hoboken, NJ: Stevens Institute of Technology Systems Engineering Research Center. Retrieved from http://www.dtic.mil/dtic/tr/fulltext/u2/1040186.pdf

7. U.S. Government Accountability Office. (2017, May). Technology assessment: Internet of Things: Status and implications of an increasingly connected world (GAO-17-75). Retrieved from https://www.gao.gov/assets/690/684590.pdf

8. Morin, M. E. (2016, September). Protecting Networks Via Automated Defense Of Cyber Systems (Master’s thesis). Monterey, CA: Naval Postgraduate School. Retrieved from https://www.hsdl.org/?view&did=796527

9. Higginbotham, S. (2018, June 20). Six ways IoT security is terrible. IEEE Spectrum. Retrieved from https://spectrum.ieee.org/telecom/security/6-reasons-why-iot-security-is-terrible

10. Zach, D., Elhabashy, A. E., Wells, L. J., & Camelio, J. A. (2016). Cyber-physical vulnerability assessment in manufacturing systems. Procedia Manufacturing, 5, 1060–1074. doi:10.1016/j.promfg.2016.08.075

11. Cañedo, J., Hancock, A., & Skjellum, A. (2017, Fall). Trust management for cyber-physical systems. Homeland Defense & Security Information Analysis Center Journal, 4(3), 15–18. Retrieved from https://www.hdiac.org/wp-content/uploads/2018/04/Trust_Management_for_Cyber_Physical_Systems_Volume_4_Issue_3-1.pdf

12. U.S. Department of Defense. (2016, December). DoD policy recommendations for the Internet of Things (IoT). DoD Chief Information Officer. Retrieved from https://www.hsdl.org/?abstract&did=799676

13. Linkov, I., & Kott, A. (2019). Fundamental concepts of cyber resilience: Introduction and overview. In Kott & Linkov (Eds.), Cyber Resilience of Systems and Networks (pp. 1–25). Basel, CHE: Springer International Publishing AG.

14. Glenn, C., Sterbentz, D., & Wright, A. (2016, December 20). Cyber Threat and Vulnerability Analysis of the U.S. Electric Sector. Idaho Falls, ID: Idaho National Laboratory. doi:10.2172/1337873

15. Perlroth, N. (2016, October 21). Hackers used new weapons to disrupt major websites across U.S. The New York Times, A1. Retrieved from https://www.nytimes.com/2016/10/22/business/internet-problems-attack.html

16. Sarma, S., & Siegel, J. (2016, November 30). Bad (Internet of) Things. ComputerWorld. Retrieved from https://www.computerworld.com/article/3146128/internet-of-things/bad-internet-of-things.html

17. Lucero II, L. (2018, May 27). F.B.I.’s urgent request: Reboot your router to stop Russia-linked malware. The New York Times, B4. Retrieved from https://www.nytimes.com/2018/05/27/technology/router-fbi-reboot-malware.html

18. U.S. Department of Homeland Security. (2018, May 15). U.S. Department of Homeland Security Cybersecurity Strategy. Retrieved from https://www.dhs.gov/sites/default/files/publications/DHS-Cybersecurity-Strategy_1.pdf

19. Osborne, C. (2018, January 17). US hospital pays $55,000 to hackers after ransomware attack. ZDNet. Retrieved from https://www.zdnet.com/article/us-hospital-pays-55000-to-ransomware-operators/

20. Newman, L. H. (2018, April 23). Atlanta spent $2.6M to recover from a $52,000 ransomware scare. Wired. Retrieved from https://www.wired.com/story/atlanta-spent-26m-recover-from-ransomware-scare/

21. Chappell, B. (2018, January 29). Pentagon reviews GPS policies after soldiers’ Strava tracks are seemingly exposed. NPR. Retrieved from https://www.npr.org/sections/thetwo-way/2018/01/29/581597949/pentagon-reviews-gps-data-after-soldiers-strava-tracks-are-seemingly-exposed

22. U.S. Government Accountability Office. (2016, September). Data and analytics innovation: Emerging opportunities and challenges (GAO-16-659SP). Retrieved from https://www.gao.gov/assets/680/679903.pdf

23. Montalbano, E. (2018, January 30). The US Military’s IoT problem is much bigger than fitness trackers. The SecurITy Ledger. Retrieved from https://securityledger.com/2018/01/security-personnel-challenges-stymie-dods-adoption-iot/

24. Johnson, B. D., Vanatta, N., Draudt, A., & West, J. R. (2017, September 13). The New Dogs of War: The Future of Weaponized Artificial Intelligence. U.S. Army Cyber Institute at West Point and Arizona State University’s Threatcasting Lab. Retrieved from http://www.dtic.mil/docs/citations/AD1040008

25. Greenberg, A. (2017, September 6). Hackers gain direct access to US power grid controls. Wired. Retrieved from https://www.wired.com/story/hackers-gain-switchflipping-access-to-us-power-systems/

26. Adib, F., & Katabi, D. (2013, August). See through walls with Wi-Fi! Paper presented at the SIGCOMM’13 conference, Hong Kong, China. Retrieved from https://people.csail.mit.edu/fadel/papers/wivi-paper.pdf

27. The White House. (2018, September). National Cyber Strategy of the United States of America. Retrieved from https://www.whitehouse.gov/wp-content/uploads/2018/09/National-Cyber-Strategy.pdf

28. Siegel, J. E., Kumar, S., & Sarma, S. E. (2018, August). The future Internet of Things: secure, efficient, and model-based. IEEE Internet of Things Journal, 5(4), 2386–2398. doi:10.1109/JIOT.2017.2755620

29. Siegel, J. E., & Kumar, S. (Forthcoming). Cloud, context, and cognition: Paving the way for efficient and secure IoT implementations. In Ranjan, R. (Ed.), Integration of Cloud Computing, Cyber Physical Systems and Internet of Things. Berlin, DE: Springer-Verlag.

30. Siegel, J. E. (2016, June). Data proxies, the cognitive layer, and application locality: Enablers of cloud-connected vehicles and next-generation Internet of Things (Doctoral dissertation). Cambridge, MA: Massachusetts Institute of Technology. Retrieved from https://dspace.mit.edu/handle/1721.1/104456

31. Siegel, J. E. (2018, September). Real-time Deep Neural Networks for internet-enabled arc-fault detection. Engineering Applications of Artificial Intelligence, 74, 35–42. doi:10.1016/j.engappai.2018.05.009

32. Kott, A., Blakely, B., Henshel, D., Wehner, G., Rowell, J., Evans, N., . . . Møller, A. (2018, April). Approaches to Enhancing Cyber Resilience: Report of the North Atlantic Treaty Organization (NATO) Workshop IST-153 (ARL-SR-0396). Adelphi, MD: U.S. Army Research Laboratory. Retrieved from https://arxiv.org/ftp/arxiv/papers/1804/1804.07651.pdf

33. Grain, D. (2014, November 14). Critical Infrastructure Security and Resilience National Research and Development Plan: Final Report and Recommendations. National Infrastructure Advisory Council. Retrieved from https://www.dhs.gov/sites/default/files/publications/NIAC-CISR-RD-Plan-Report-508.pdf