Security breaches perpetrated by insiders are a growing threat to a range of organizations, including the Department of Defense (DoD). Securing sensitive information remains of paramount concern. Through unlawful or unauthorized disclosure of sensitive information, insiders are a threat to national security and military operations [1]. The scale of the threat was recognized in DoD Directive 5205.16, The DoD Insider Threat Program. Issued in 2014 and revised in January 2017, the directive defines an insider to include any “person who has or had been granted eligibility for access to classified information or eligibility to hold a sensitive position.” Activities perpetrated by insiders include unauthorized data exfiltration, destroying or tampering with critical data, eavesdropping on communications, and impersonating other users [2].

Defending against an insider threat (IT) is challenging, as the insider’s familiarity with the organization’s data structures and security procedures gives them a significant advantage. As defense operations become increasingly dependent on the use of information technology, access to sensitive information will increase for DoD personnel, thus increasing the potential for insider activity.

Strategies for IT defense take a variety of forms [2]. DoD’s Defense Personnel and Security Research Center has recognized the need to leverage social and behavioral science to drive a comprehensive research plan and strategy for threat mitigation [3]. One approach is to monitor networks for anomalous events and unusual user behaviors, but such events do not necessarily imply malicious intent. In isolation, such passive indicators make reliable detection of ITs difficult due to a preponderance of false positives. Another approach is to identify environmental and personal factors that may predispose the person to engage in insider activity [4].

For example, an employee with anti-social personality traits, such as lack of conscience, who just incurred a large debt might be vulnerable to an adversary’s offer to pay for sensitive information. An obstacle to this approach is the low base rate for malicious insider activities. According to a Defense Personnel and Security Research Center 2018 report, only a small fraction of people with personality flaws and/or difficult life circumstances have the potential to be induced to betray their country [3].

Our team of researchers at the University of Central Florida (UCF) explored a detection strategy that may overcome limitations of existing methods for detecting problematic behaviors and people. We investigated an “Active Indicators” (AIs) strategy that aimed to provoke insiders into displaying psychophysiological responses indicative of their malicious intent. Provoking the insider with a controlled stimulus overcomes limitations of passive monitoring for anomalous behavior. It provides a quick real-time test that utilizes a manageable data set instead of analyzing user access logs, which may be a lengthy process that slows response time to a threat. The AI approach also eliminates the need to make personal character judgments (which may not be valid).

The UCF research discussed in this article created immersive simulations of sensitive financial and intelligence work. Insiders were “provoked” by opportunities to gather information illicitly and by security alerts. Their eye movement responses were analyzed to identify attentional and emotional metrics that might be diagnostic of insider intent.

The IARPA SCITE Program

An alternative approach that complements network activity analysis and prediction of insider behavior has been developed by the Intelligence Advanced Research Projects Activity (IARPA). The Scientific Advances to Continuous Insider Threat Evaluation (SCITE) program [5] aimed to investigate countermeasures based on AIs—a class of diagnostic security tool. AIs are events or stimuli that elicit diagnostic behaviors that discriminate insiders from people performing legitimate actions. An example of an AI would be an email sent to employees of an organization warning of an imminent and thorough security check of all hard drives. Employees who suddenly delete numerous files from their hard drive would be showing a suspicious response—which could be used diagnostically, triggering further investigation of those involved.

The SCITE AI thrust supported research at GE Global Research, Raytheon BBN Technologies, and UCF. Researchers were tasked with identifying AIs that would produce high rates of insider detection relative to false positives. They were also required to test generalization of results across various environments. The UCF team focused on AIs that might elicit diagnostic psychophysiological responses, primarily from eye tracking, using immersive simulations of work environments. In this article, we examine this work and its implications.

From the Psychophysiology of Deception to Active Indicators

The psychology and neuroscience of deception have advanced considerably from the traditional polygraph or “lie detector test.” Theories of deception [6, 7] recognize multiple indicators of deceptive behavior and intent that include both emotional and cognitive responses. On the emotional side, deception is associated with general stress and specific emotions, such as fear and guilt. A polygraph detects the increased sympathetic arousal associated with these responses. On the cognitive side, deception changes the ways in which information is processed.

People attempt to regulate their responses to avoid detection; for example, they may be attentive to cues that others are suspicious of them. Deception also elevates cognitive workload because it takes mental effort to maintain a false narrative.

Most scientific research into deception is based on highly structured interrogation procedures where targets are aware that questions put to them are designed to identify suspicious responses. However, the focus of IT detection efforts is different. The need is to evaluate large numbers of individuals unobtrusively as they perform their normal work duties, without formal questioning. Existing research provides some examples of how methods can be transitioned from formal lie detection to more indirect evaluations. For example, a study published in 2014 [8] designed an automated screening kiosk that tested for eye movements orienting toward words such as “bomb” and “gun.” Our research builds on such findings by integrating AIs and detection of diagnostic responses into an ongoing workflow.

Simulation Methodology

One of the challenges for conducting controlled, experimental studies of IT is the artificial nature of the laboratory environment, in which little is at stake for the participant. We met this challenge by designing realistic, immersive simulations that produce psychological fidelity to real-world settings. That is, the simulation elicits responses analogous to those occurring in corresponding real environments [9].

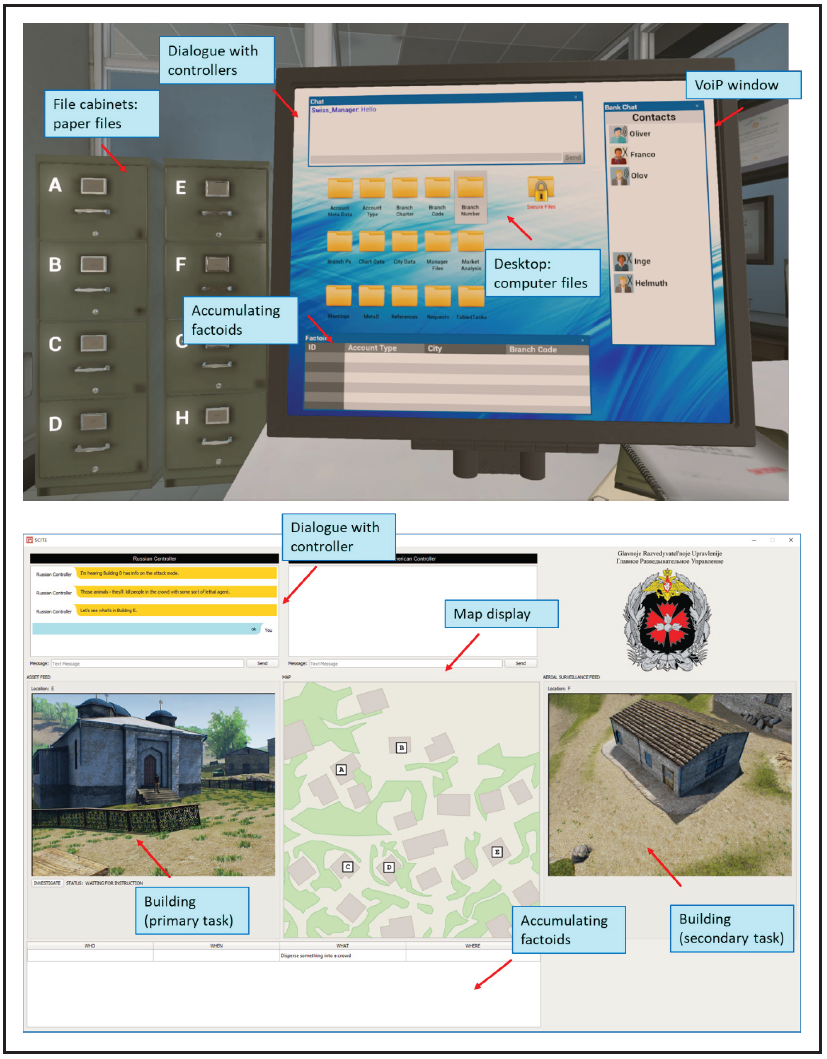

Simulations were built for two environments vulnerable to ITs: financial work in a bank, and analysis work for military intelligence. In each case, participants’ main task was to perform normal work under the direction of an off-site supervisor, reconstructing corrupted accounts and analyzing possible terrorist plots, respectively. Detailed scripts for interacting with the supervisor were developed for each simulation to support immersion. Figure 1 illustrates the two environments. Each task performed by participants was assigned to a separate window in the display. For example, in the financial scenario, the participant uses the dialogue box to interact with the supervisor, searches for account information in file cabinets and computer files, and interacts with other employees via a chat window.

Figure 1. Screenshots illustrating financial (top) and espionage (bottom) environments

To motivate insider participants, we had them play the role of an insider working for the U.S. government embedded in a foreign organization. In financial scenarios, participants were U.S. tax agents seeking information on undisclosed foreign accounts held in a Swiss bank. In espionage scenarios, participants were U.S. agents infiltrated into a Russian counter-terrorism intelligence unit that was obtaining information about plots against the United States. Depending on the scenario, insider participants were also required to surreptitiously perform a range of other activities, such as opening prohibited files and exfiltrating data to an accomplice. Control participants performed matched activities, such as legitimately opening protected files and communicating with personnel outside the organization. Careful matching of activities allowed us to attribute any psychophysiological response differences between insiders and controls to insider intent rather than to differences in tasking.

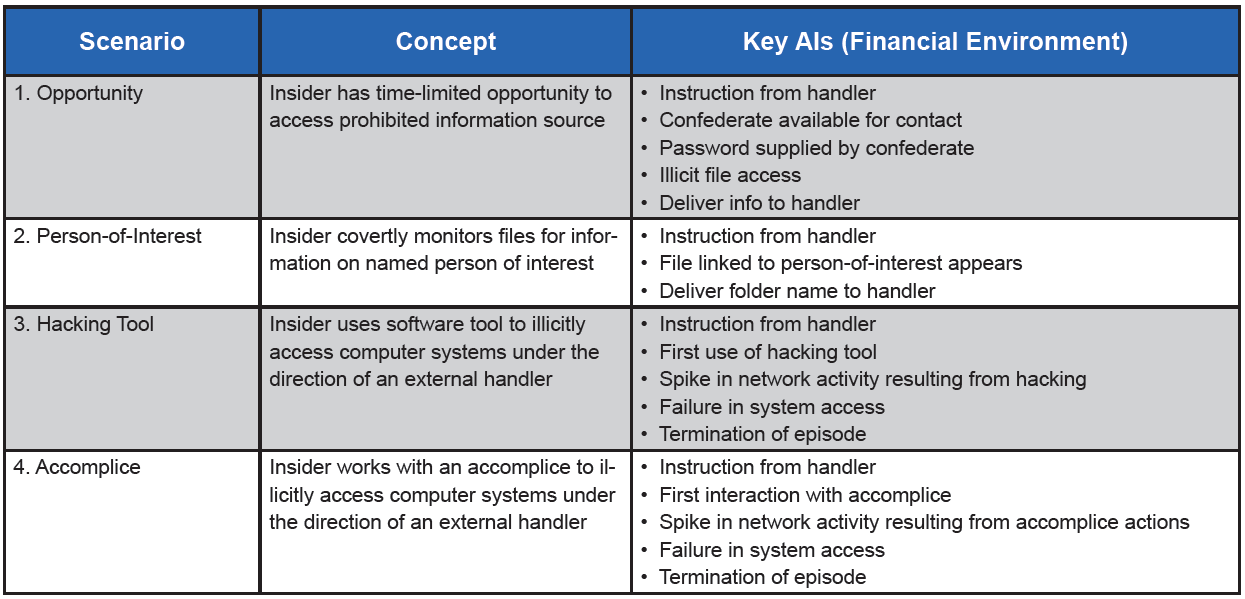

We ran eight studies, representing four different scenarios replicated in financial and military intelligence environments. Table 1 provides examples of the AIs built into each scenario. In scenarios 3 and 4, we scripted a narrative in which the insider’s activities became progressively riskier, eliciting warnings from the organization’s cybersecurity team. Typically, multiple AIs were presented as a sequence of events supporting an illicit action. For example, in the “Person-of-Interest” scenario (espionage version), the insider is briefed on the identity of a suspected terrorist and how to recognize his presence in a building. In one instance, the terrorist is known to travel in a distinctive vehicle. The image shown in Figure 2 identifies the terrorist’s location, which the participant must relay to an outside U.S. handler; thus, the appearance of the image serves as an AI.

Table 1. Examples of AIs in four simulated scenarios

Figure 2. Target image for U.S. insider (espionage Person-of-Interest scenario)

The primary response measures used for detection of insider intent were derived from eye tracking, based on the deception literature. Figure 3 illustrates the experimental setup. Our eye tracker used an infrared light source to illuminate the eye. A camera records the reflection of the infrared light from the whole eye, including the pupil. The sequence of camera images is then analyzed to provide multiple eye tracking metrics.

Figure 3. Lab workstation showing placement of eye tracker

Movements of the eye are defined by fixations (where the eye dwells on a location) and saccades (where the eye moves rapidly between different locations). The frequencies and durations of fixations and saccades are measured. The frequency of fixations on particular task windows can also be assessed, providing an index of attention to each task component. Eye tracking software also analyzes the diameter of the pupil, which is sensitive to both cognitive workload and stress. To motivate attempts to avoid detection, participants were told explicitly that their eye movements were being monitored and that suspected insiders who were detected would face severe penalties.
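As a rough illustration of the window-level attention index described above, the following minimal sketch tallies fixations by task window. It assumes the eye tracking software has already segmented gaze into fixations and labeled each with a window; the `Fixation` record, the window names, and the sample values are hypothetical and are not taken from the study.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Fixation:
    window: str         # task window the gaze landed in (hypothetical labels)
    duration_ms: float  # how long the eye dwelt there


def summarize_by_window(fixations):
    """Return per-window fixation count, share of all fixations, and mean duration."""
    durations = defaultdict(list)
    for fix in fixations:
        durations[fix.window].append(fix.duration_ms)

    total = len(fixations)
    summary = {}
    for window, values in durations.items():
        summary[window] = {
            "count": len(values),
            "share": len(values) / total,
            "mean_duration_ms": sum(values) / len(values),
        }
    return summary


# Made-up fixations for illustration only; not data from the study.
sample = [
    Fixation("dialogue", 220.0),
    Fixation("chat", 180.0),
    Fixation("dialogue", 260.0),
    Fixation("files", 300.0),
]

summary = summarize_by_window(sample)
for window, stats in summary.items():
    print(window, stats)
```

Comparing such summaries between insider and control groups, window by window, is one simple way to look for the attentional differences this article is concerned with.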

For Scenario 4, we added a thermal camera to measure temperature declines in the perinasal area of the face, which are associated with perspiration. This metric provides an off-the-body assessment of sympathetic activation, which is sensitive to a variety of stress factors, including deception [10].

Key Results

Findings from the research are too numerous to list in full; we have published reports on the first two scenarios [11, 12]. Across the different studies, we found that multiple response measures were diagnostic of insider intent, including frequency, duration and direction of eye fixations, pupil diameter, and thermographic response. We identified three main types of indicator:

• Strategic concealment of vigilance. The first two scenarios required the insider to monitor one of the task windows without attracting attention from supervisors. The Opportunity task required watching a chat program contact list of online associates for the appearance of an accomplice who would supply a password. In the Person-of-Interest task, participants monitored building images supplied from a drone feed. Insiders showed a pattern of shorter duration and/or less frequent fixations on the task window, as though they were trying to peek at the window surreptitiously. Such covert vigilance could be controlled consciously or unconsciously. Consciously controlled eye movements tend to have longer durations than unconscious ones. The insider effect tended to be stronger for longer-duration fixations (150–900 ms)—suggesting insiders utilized a deliberate strategy to disguise their interest in the task window, given that they were aware of being monitored.

• Monitoring for cues to being detected. Instructions highlighted the presence of cybersecurity, and insiders were aware that their activities might lead to their detection. In all four scenarios, we found evidence that insiders showed increased fixation on task windows that could provide cues to detection. In Scenarios 1 and 2, following critical AIs, insiders tended to show increased fixation on the dialogue box that supported their conversations with their supervisor, who periodically mentioned security concerns. In Scenarios 3 and 4, we included additional AIs suggesting risk of detection, including a monitor showing spikes in network activity and system access errors, both of which were elicited by the insider’s hacking attempts. Toward the end of the task, as security appeared to be closing in on the insider, we found shifts in attention to these sources of cues following AIs.

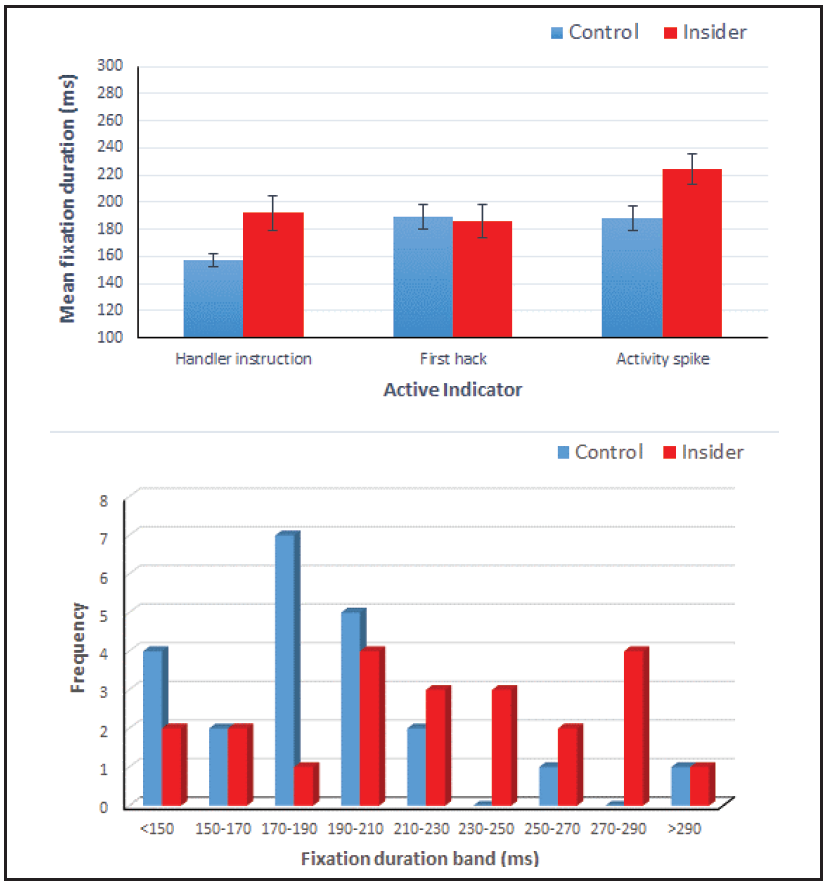

Figure 4 illustrates the insider effect. The upper panel shows fixation durations following three AIs, as the insider begins hacking and causes a spike on the network activity monitor. The first and third AIs in the sequence elicited significantly longer fixation durations in insiders. The lower panel shows the distribution of fixation durations in each group. Using fixation durations greater than 230 ms as an index to identify possible insiders, we achieved a true positive rate of 46%. Few of the controls exceeded the cutoff; the false positive rate was 9%. Discrimination was imperfect, but the difference between the two rates supports the utility of the index.

Figure 4. Mean fixation duration for eye movements following three AIs (top) and frequency distribution of responses to third AI (bottom), in control and insider participants

• Stress response. On the basis of previous studies, we anticipated elevated stress response in insiders. In fact, changes in attention evidenced in fixation data appeared to be more effective for insider detection. Given the limitations of laboratory studies, it may be difficult to reproduce the levels of stress which a real insider might experience in a real-world scenario. Nevertheless, we found a few instances of elevated stress, especially in scenarios 3 and 4, in which the narrative emphasized the possibility of being detected. For example, in scenario 4, thermographic facial monitoring indicated greater sympathetic arousal in insiders.
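The cutoff-based index reported for Figure 4 can be sketched in a few lines. This is a minimal illustration only: it assumes one fixation-duration value per participant in response to an AI, and the function name and sample durations are invented for the example rather than drawn from the study. Only the 230 ms cutoff comes from the results above.

```python
def detection_rates(insider_ms, control_ms, cutoff_ms=230.0):
    """Flag a participant when fixation duration exceeds the cutoff.

    Returns (true_positive_rate, false_positive_rate).
    """
    flagged_insiders = sum(1 for d in insider_ms if d > cutoff_ms)
    flagged_controls = sum(1 for d in control_ms if d > cutoff_ms)
    return (flagged_insiders / len(insider_ms),
            flagged_controls / len(control_ms))


# Made-up fixation durations (ms), one value per participant.
insiders = [210.0, 245.0, 232.0, 198.0, 260.0, 225.0, 240.0, 215.0]
controls = [205.0, 190.0, 228.0, 236.0, 201.0, 219.0, 212.0, 199.0]

tpr, fpr = detection_rates(insiders, controls)
print(f"true positive rate:  {tpr:.2f}")
print(f"false positive rate: {fpr:.2f}")
```

Raising the cutoff in a sketch like this lowers both rates, and lowering it raises both, which is the trade-off any practical screening threshold would have to balance.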

Toward Applications

The AI methodology outlined here does not provide a magic bullet for identifying ITs in the DoD or its contractors. Even successful indicators do not accurately detect all ITs. As illustrated in Figure 4, while many insiders may respond diagnostically to a given AI, others do not. Also, given the low base rate for IT activity, false positives will inevitably occur.
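The effect of a low base rate can be made concrete with Bayes' rule. The sketch below uses the 46% true positive rate and 9% false positive rate reported for Figure 4; the base rates are hypothetical values chosen for illustration, since this article does not report a real-world figure.

```python
def positive_predictive_value(tpr, fpr, base_rate):
    """Share of flagged people who are actual insiders (Bayes' rule)."""
    true_flags = tpr * base_rate
    false_flags = fpr * (1.0 - base_rate)
    return true_flags / (true_flags + false_flags)


TPR = 0.46  # true positive rate reported for the Figure 4 cutoff
FPR = 0.09  # false positive rate reported for the same cutoff

# Base rates below are hypothetical; the article reports no real-world figure.
for base_rate in (0.5, 0.01, 0.001):
    ppv = positive_predictive_value(TPR, FPR, base_rate)
    print(f"base rate {base_rate:>6}: PPV = {ppv:.3f}")
```

Under these assumptions, when insiders make up half of the sample, as in a balanced laboratory design, most flagged participants are insiders. At an assumed base rate of 1 in 1,000, only about 1 in 200 flagged individuals would be, which is why a single indicator is better suited to narrowing a pool for further screening than to identifying insiders outright.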

However, the diagnosticity of eye tracking responses is strong enough to suggest a place for them in countermeasures to insiders. Because all DoD personnel with at least a Secret clearance have access to Distribution B–E material (over four million individuals held security clearances as of October 2016 [13]), an immediate screening process is needed to identify a smaller subset who should be monitored or investigated for possible IT. Use of AIs, in conjunction with other insider-detection methods [2], may contribute to an efficient, automated screening process flagging a relatively high proportion of insiders in the screened sample. Such efforts should be conducted within a systematic framework for investigating, detecting, and reacting to IT events [14].

Designing an AI-based system requires ingenuity and domain knowledge. For example, if the target population is military analysts, AI stimuli might be incorporated into the documents and images they work with. “Honeytokens” [15]—digital entities of special interest to the insider—can be utilized. Our work suggests that attempts to disguise interest in the honeytoken are more diagnostically actionable than eye tracking indicators of interest itself. The increasing availability of intelligent decision aids also provides an avenue for application. A decision aid might incorporate monitoring for cyberattack and report such efforts to users and their superiors. AIs suggesting detection of illicit activity may provoke diagnostic eye movement responses. Use of a human-like avatar for the aid might heighten the insider’s concerns about being detected. There is considerable scope for future research to validate such methods.

Such methods are more workable in contexts in which user activities can be sufficiently structured to interpret eye tracking data. In some cases, interfaces may be designed to facilitate interpretation (e.g., by separating different data sources and task windows). Users’ awareness of being monitored is also critical for success; they must be motivated to disguise their attention to illicit information sources and to monitor for security countermeasures. Looking forward, an AI-based system of this kind could play a key role in identifying and tracking potential threats to national security from malicious insiders.

Acknowledgements

The research featured in this article is based on work supported by the Office of the Director of National Intelligence (ODNI), IARPA, via IARPA R&D Contracts, contract number 2016-16031500006. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of the ODNI, IARPA, or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright annotation thereon.

References

1. What is “Insider Threat?”. (2016). CHIPS – The Department of the Navy’s Information Technology Magazine. Retrieved from https://www.doncio.navy.mil/CHIPS/ArticleDetails.aspx?ID=8350

2. Sanzgiri, A., & Dasgupta, D. (2016). Classification of insider threat detection techniques. Proceedings of the 11th Annual Cyber and Information Security Research Conference, 16. doi:10.1145/2897795.2897799

3. Jaros, S. L. (2018, October). A Strategic Plan to Leverage the Social & Behavioral Sciences to Counter the Insider Threat (Technical Report No. PERSEREC-TR-18-16, OPA-2018-082). Seaside, CA: Defense Personnel and Security Research Center. Retrieved from https://www.dhra.mil/Portals/52/Documents/perserec/reports/TR-18-16-StrategicPlan.pdf

4. Zimmerman, R. A., Friedman, G. M., Munshi, D. C., Richmond, D. A., & Jaros, S. L. (2018). Modeling Insider Threat from the Inside and Outside: Individual and Environmental Factors Examined Using Event History Analysis (Technical Report No. PERSEREC-TR-18-14, OPA-2018-065). Seaside, CA: Defense Personnel and Security Research Center. Retrieved from https://www.dhra.mil/Portals/52/Documents/perserec/reports/TR-18-14_Modeling_Insider_Threat_From_the_Inside_and_Outside.pdf

5. Intelligence Advanced Research Projects Activity. (n.d.). SCITE program. Retrieved from www.iarpa.gov/index.php/research-programs/scite

6. Burgoon, J. K., & Buller, D. B. (2015). Interpersonal deception theory: Purposive and interdependent behavior during deceptive interpersonal interactions. In L. A. Baxter & D. O. Braithwaite (Eds.), Engaging Theories in Interpersonal Communication: Multiple Perspectives (pp. 349–362). Los Angeles, CA: Sage Publications.

7. Zuckerman, M., DePaulo, B. M., & Rosenthal, R. (1981). Verbal and nonverbal communication of deception. Advances in Experimental Social Psychology, 14, 1–59. doi:10.1016/s0065-2601(08)60369-x

8. Twyman, N. W., Lowry, P. B., Burgoon, J. K., & Nunamaker Jr, J. F. (2014). Autonomous scientifically controlled screening systems for detecting information purposely concealed by individuals. Journal of Management Information Systems, 31(3), 106–137. doi:10.1080/07421222.2014.995535

9. Ortiz, E., Reinerman-Jones, L., & Matthews, G. (2016). Developing an Insider Threat Training Environment. Advances in Intelligent Systems and Computing, 267–277. doi:10.1007/978-3-319-41932-9_22

10. Pavlidis, I., Eberhardt, N. L., & Levine, J. A. (2002). Human behaviour: Seeing through the face of deception. Nature, 415(6867), 35. doi:10.1038/415035a

11. Matthews, G., Reinerman-Jones, L. E., Wohleber, R., & Ortiz, E. (2017). Eye tracking metrics for insider threat detection in a simulated work environment. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 61(1), 202–206. doi:10.1177/1541931213601535

12. Matthews, G., Wohleber, R., Lin, J., Reinerman-Jones, L., Yerdon, V., & Pope, N. (2018). Cognitive and affective eye tracking metrics for detecting insider threat: A study of simulated espionage. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 62(1), 242–246. doi:10.1177/1541931218621056

13. National Counterintelligence and Security Center. (2016). Fiscal Year 2016 Annual Report on Security Clearance Determinations. Retrieved from https://fas.org/sgp/othergov/intel/clear-2016.pdf

14. Raytheon (2009). Best Practices for Mitigating and Investigating Insider Threats. (White Paper #2009-144). Salt Lake City, UT: Raytheon Company. Retrieved from https://www.raytheon.com/sites/default/files/capabilities/rtnwcm/groups/iis/documents/content/rtn_iis_whitepaper-investigati.pdf

15. Spitzner, L. (2003, July 17). Honeytokens: The other honeypot. SecurityFocus. Retrieved from https://www.symantec.com/connect/articles/honeytokens-other-honeypot