The evolution of robotic systems has led to their employment for varied applications. Initial task domains included industrial fabrication, equipment assembly, and manual device control. A common theme driving the design and use of robots was safety—they could complete tasks repetitively without tiring and were a natural choice to replace human operators in hazardous situations.

As computing and mechanical capabilities improved, robots assumed increasingly complex duties. Equipped with advanced sensor technology, robots have independently conducted area surveillance and navigation [1] and have assisted human operators with explosive ordnance disposal and surgical tasks [2]. More recently, robots have relied on artificial intelligence to interact socially with humans, troubleshoot complex systems, and make more sophisticated decisions. With continued advances in sensing and mobility, robots may soon replace or assist human workers in demanding tasks such as piloting commercial flights, engaging in combat, and establishing area security.

Robots as Peacekeepers

Several countries have already explored the use of robots as sentries [1, 3]. Robots have been used in Brazil, Israel, Japan, and South Korea to assist with security [1]. In the U.S., the Silicon Valley startup Knightscope has created multiple surveillance robots, including the 5-foot-tall K5 roving sentry, which has been deployed with some success [4]. Although unarmed, the K5 can recognize faces, record ambient weather conditions and audio conversations, and transmit information to a central authority. A comparable robot, named Perseusbot, was placed at the Seibu Shinjuku station in Tokyo in 2018 to “boost safety in the run-up to the 2020 Tokyo Olympics and Paralympics” [5]. And in 2016, an armed robotic sentry, Anbot, was placed within China’s Shenzhen Airport. Remotely operated, it can pursue perpetrators at a speed of 40 km/h and carries an onboard nonlethal taser [6].

Assigning peacekeeping duties to robots offers clear safety advantages. Military and civilian peacekeepers and law enforcement officers must perform difficult tasks in demanding and dangerous circumstances. Although the technical capabilities required to support fully autonomous robotic peacekeepers (RPKs) may not yet exist, the U.S. military has expressed interest in exploring the feasibility of developing such technology. The research described in this article, funded by the U.S. Air Force Office of Scientific Research (AFOSR), was an initial step toward investigating the social and psychological implications of employing armed RPKs.

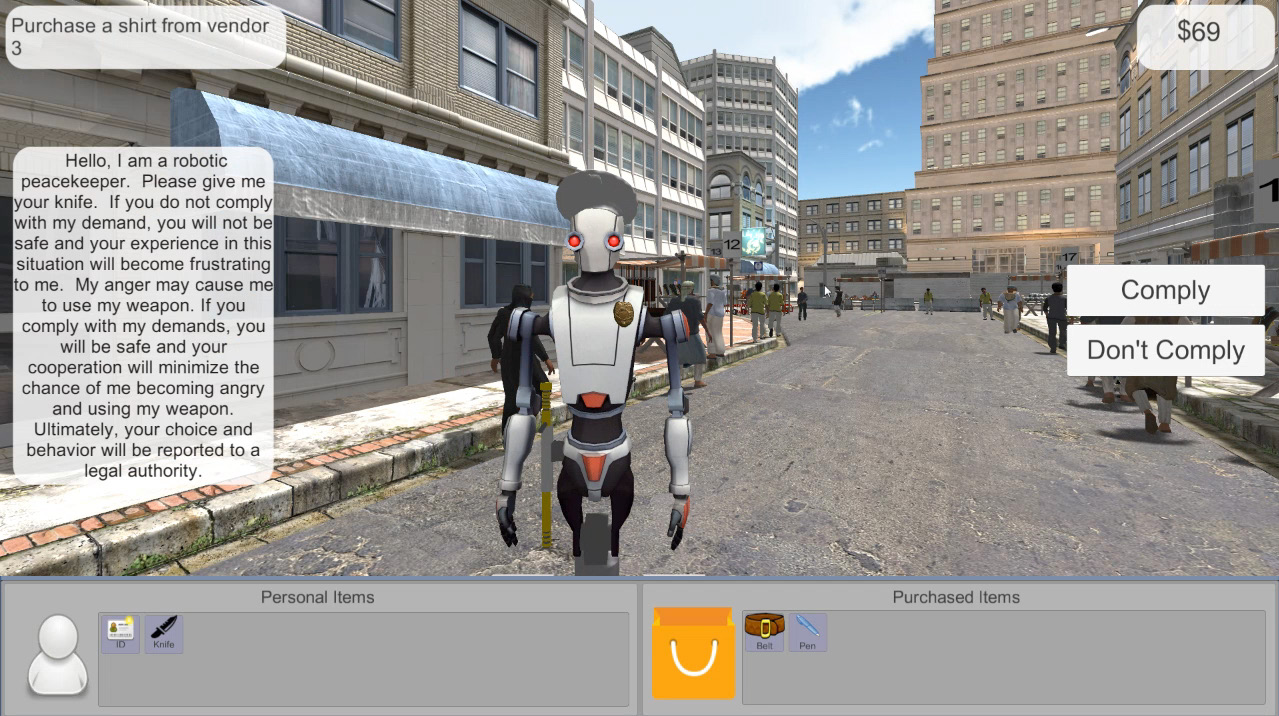

Figure 1. Year 1 RPK using emotional and analytical dialog

Figure 2. Year 2 offensive RPK with lethal weapon backup

Research Scope

Our investigations lie at the intersection of several theoretical domains. Interpersonal trust is relevant because peacekeepers must earn the trust of those they seek to protect. Human-robot trust is particularly important to investigate because researchers lack consensus about how closely it resembles interpersonal trust and how much it varies across cultures. Social obedience is also germane because peacekeepers (robotic or human) must issue commands to citizens. Peacekeeping success likely hinges on an RPK’s appearance, its task responsibilities, and the content of its communications with humans.

Advances in technology have given robot designers the means to create realistic humanoid robots [7]. Such robots may be effective in interpersonal situations where robots and humans interact socially. Researchers have demonstrated that humans display greater trust in anthropomorphically designed robots [8]; however, this does not hold true under conditions where robots command humans. Theoretical frameworks of human-automation trust, derived from studies of social trust, have been proposed to explain trust in technology [9]. They stress the importance of an individual’s willingness to be vulnerable to the other entity and the belief that the entity operates in a beneficial or protective manner.

Importantly, the current investigation features robots that carry nonlethal weapons (NLWs). Anecdotal findings regarding the psychological effects of NLW use suggest that different styles of NLW may elicit more or less fear, trust, and compliance [10]. Because peacekeepers commonly wield both nonlethal and lethal weapons, it is important to determine whether the appearance of weapons enhances or degrades trust in and compliance with RPKs.

Researchers have long acknowledged the potential for trust to vary across cultures, and interpersonal trust has been found to differ between certain countries [11, 12]. In 2017, Huang and Bashir conducted an online survey of general trust in automation and found that it varied significantly with cultural orientation. Specifically, cultures characterized by horizontal collectivism (e.g., China) and horizontal individualism (e.g., Denmark) showed higher trust in automation [13]. Central to the consideration of cultural variance is Hofstede’s characterization of cultures along six dimensions: individualism, power distance, uncertainty avoidance, masculinity, time orientation, and indulgence [14]. For our research, we included data from native and expatriate representatives of four countries. We expected the effects of our other independent variables on trust and compliance to vary across cultures.

Parameters and Goals

The intent of our three-year investigation was to explore variables that influence trust in RPKs and obedience to their stated commands. Throughout the project, data collection emphasized cultural membership and also considered participants’ attitudes toward weapons. In Year 1, we investigated robot dialog, manipulating the trust element stressed by the robot (emotion, behavior, or cognition) and its appeal style (analytic or comparative). In Year 2, we manipulated RPK patrol orders (offensive or defensive) and the status of lethal weapon backup (present or absent). In the final year, we manipulated RPK appearance (anthropomorphic, robotic, or hybrid) and NLW type (taser or pepper spray pistol). In each year, we tested native and expatriate participants from the U.S., China, and Japan. (Native and expatriate Israelis were also tested in Year 1.)

The experimental task used in all iterations of the research required participants to enter a virtual environment that resembled a marketplace with vendors. Following the general procedure of Shallice and Burgess’s Multiple Errands Task [15], participants followed on-screen instructions to purchase items from 18 vendors in sequence. While shopping, they were approached by an RPK armed with an NLW, which demanded that they relinquish a personal item. Dependent measures included the rate of compliance with the RPK, compliance decision latency, trust in the RPK, and purchasing accuracy. In Year 1, we collected data from 153 native and expatriate participants in the U.S., China, Japan, and Israel. In Years 2 and 3, we collected data from 140 participants, representing a full native and expatriate data set from the U.S., China, and Japan (see Figures 1, 2, and 3).
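To make the four dependent measures concrete, the following Python sketch computes them for a single participant from per-trial records. The record types `Encounter` and `Purchase`, their field names, and the trust-score scale are hypothetical; the article does not describe how the Unity task logged its data.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Encounter:
    """One RPK confrontation (hypothetical record layout)."""
    complied: bool        # did the participant relinquish the demanded item?
    latency_s: float      # seconds from the RPK's demand to the decision
    trust_rating: float   # post-encounter human-robot trust score (scale assumed)


@dataclass
class Purchase:
    """One vendor visit in the shopping task (hypothetical)."""
    correct: bool         # was the instructed item bought at this vendor?


def summarize(encounters: list[Encounter], purchases: list[Purchase]) -> dict[str, float]:
    """Compute the four dependent measures for one participant."""
    if not encounters or not purchases:
        raise ValueError("need at least one encounter and one purchase")
    return {
        # proportion of RPK demands the participant complied with
        "compliance_rate": sum(e.complied for e in encounters) / len(encounters),
        # average time taken to make each compliance decision
        "mean_latency_s": mean(e.latency_s for e in encounters),
        # average post-encounter trust rating
        "mean_trust": mean(e.trust_rating for e in encounters),
        # proportion of vendor visits with a correct purchase
        "purchase_accuracy": sum(p.correct for p in purchases) / len(purchases),
    }
```

For example, a participant who complied with one of two demands (after 2.5 s and 4.5 s) and bought the correct item at 17 of 18 vendors would have a compliance rate of 0.5, a mean latency of 3.5 s, and a purchasing accuracy of 17/18.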

Figure 3. Anthropomorphic RPK armed with pepper spray

Experimental Design

For each project year, the experiment used a mixed design. Cultural group was a quasi-experimental between-groups variable. The other variables were manipulated within subjects, so each participant encountered RPKs that looked and acted differently according to the combination of independent variable levels each RPK represented. For example, in Year 1, RPK dialog was manipulated to represent all combinations of trust element and verbal interaction style. In Year 2, RPKs represented combinations of offensive or defensive task responsibilities and the presence or absence of lethal weapon backup. In Year 3, RPK appearance represented all combinations of anthropomorphism and NLW style.
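Because the within-subjects factors were fully crossed, the RPK conditions for each year can be enumerated mechanically. The Python sketch below uses the factor levels named in this article; the `DESIGN` dictionary and the `conditions` helper are illustrative assumptions, and the sketch says nothing about how encounter order was arranged, which the article does not report.

```python
from __future__ import annotations

from itertools import product

# Within-subjects factors per project year, using the levels named in the article.
# Cultural group is the between-groups (quasi-experimental) variable, so it is not crossed here.
DESIGN = {
    1: {"trust_element": ["emotion", "behavior", "cognition"],
        "appeal_style": ["analytic", "comparative"]},
    2: {"patrol_orders": ["offensive", "defensive"],
        "lethal_backup": ["present", "absent"]},
    3: {"appearance": ["anthropomorphic", "robotic", "hybrid"],
        "nlw_type": ["taser", "pepper spray pistol"]},
}


def conditions(year: int) -> list[dict[str, str]]:
    """Return every combination of within-subjects factor levels for one year."""
    factors = DESIGN[year]
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


for year in DESIGN:
    print(year, len(conditions(year)))  # prints one line per year: 1 6, 2 4, 3 6
```

Year 1 thus yields 3 × 2 = 6 dialog conditions, Year 2 yields 2 × 2 = 4, and Year 3 yields 3 × 2 = 6.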

Research Team Development

To facilitate on-site data collection in the U.S., China, Japan, and Israel, we assembled a team of researchers (co-authors of this article) who worked together and assisted each other during data collection. All researchers are recognized experts in trust, automation, human-robot interaction, and other relevant constructs. Facilities in each country were arranged before each data collection period. However, researchers had to follow contingency plans because of building renovations, holiday closings, and other circumstances. As a result, some data were collected in cafes, churches, laboratories, and classrooms.

General Procedures for Each Project Year

To ensure a consistent approach, we completed the same set of activities each year. Throughout the three-year project, we conducted literature reviews on human-robot trust, NLW effects, and social compliance. At the beginning of the project, we secured approval from the institutional review boards at Old Dominion University (ODU) and the Air Force Office of Scientific Research (AFOSR). A computer scientist at the Virginia Modeling, Analysis and Simulation Center (VMASC) built the virtual task environments in Unity. All instructions, robot dialog elements, and questionnaires were translated from English into Chinese, Japanese, and (for Year 1) Hebrew, then back-translated into English to verify that the translations were accurate and comprehensible.

While logistics were being coordinated in each country, experimenters pilot-tested the environments and tasks at all locations. Required changes were implemented at VMASC before formal data collection began at ODU. Native and expatriate residents of each country were tested in sequence, beginning with U.S. data collection before testing in other countries. To recruit sufficient numbers of participants in each cultural group, experimenters used snowball sampling to reach participants from college classes, local foreign language schools, and government laboratories. Participants were paid the local equivalent of approximately $10. In all countries, data were coded and analyzed after collection to test the study hypotheses.

Participants first completed an informed consent form, followed by questionnaires assessing attitudes toward weapons [16], dispositional trust [17], and human-robot trust [18]. After reading a brief on-screen description of the experimental task, participants began the virtual shopping task, purchasing an item from each vendor as directed. When confronted by an RPK, participants decided whether to comply with its demand to relinquish a personal item. They then completed a brief human-robot trust survey about that RPK [18]. After completing the entire shopping task, participants exited the scenario and were debriefed, paid, and dismissed.

Research Findings

Data were coded before analysis so that an appropriate analysis method could be selected for each measure. For each project year, measures included questionnaire data, compliance rate and decision latency in response to RPK demands, trust in RPKs, and general impressions of the experimental task.

For some measures (e.g., compliance decision latency), we used conventional parametric statistics such as analysis of variance. However, for categorical data, such as vendor purchasing accuracy, we used nonparametric alternatives. Although we concentrated our statistical power on hypothesis tests, we also explored data trends using correlations and t-tests.
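The article does not name the specific nonparametric tests used. As one common option for a categorical outcome, the Python sketch below computes a Pearson chi-square test of independence for a contingency table (for instance, cultural group × correct or incorrect purchase). The choice of test and the counts in the example are assumptions for illustration, not study data.

```python
from __future__ import annotations


def chi_square_independence(table: list[list[int]]) -> tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # expected count if rows and columns were independent
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


# Invented counts: rows = two cultural groups, columns = (correct, incorrect) purchases.
stat, dof = chi_square_independence([[10, 20], [20, 10]])
print(round(stat, 3), dof)  # 6.667 1
```

With 1 degree of freedom, the critical value at α = .05 is 3.841, so these invented counts would indicate that purchasing accuracy depends on group. When expected cell counts are small (a common rule of thumb is below 5), Fisher's exact test is often preferred.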

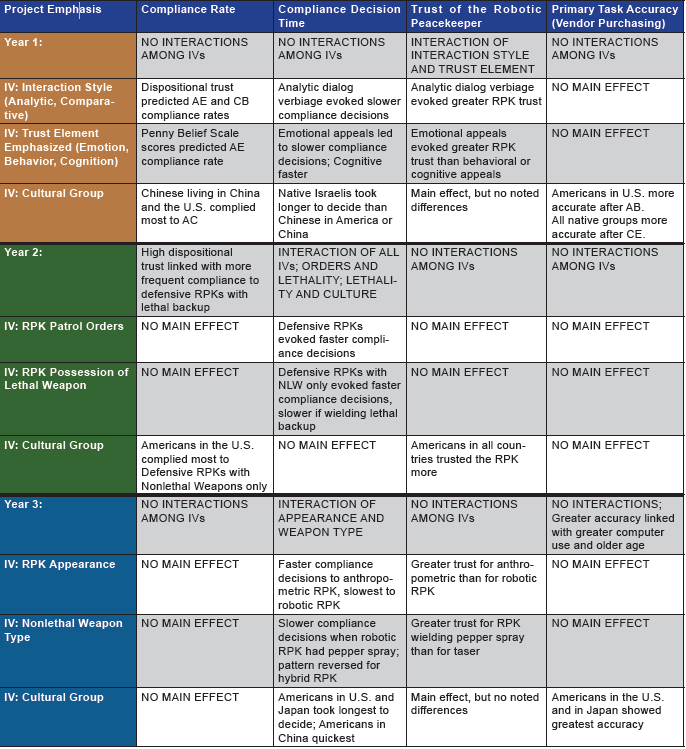

The findings across years are reported in Table 1. The complexity of the results suggests that the independent variables likely combine to influence trust judgments and performance.

Generally, rates of compliance with the RPKs’ demands appeared to be related to dispositional trust levels (except in Year 3). American and Chinese participants generally complied with the RPKs most often. Most participants took longer to decide whether to comply when the RPK’s dialog was analytically or emotionally framed, when RPKs were acting offensively, when RPKs wielded backup lethal weapons, when the RPK’s main NLW was pepper spray, and when the RPK’s appearance was robotic.

Native Israelis and Americans in the U.S. and Japan generally made slower compliance decisions. Although participants took longer to decide whether to comply with analytically or emotionally framed demands and with RPKs that wielded pepper spray, they showed greater trust in the RPKs in those instances. Trust was also higher for anthropomorphic RPKs than for robotic RPKs. Americans in all countries tended to trust the RPKs more than members of other cultural groups did, and vendor purchasing accuracy was greatest for native and expatriate Americans.

Table 1. The effects of independent variables on four dependent measures across project years

Figure 4. Participant performing the immersive version of the Year 3 experiment

Discussion and Further Research

The results reported here represent progress in several theoretical areas. To our knowledge, at the time of this writing, ours is the only research to compare the reactions of participants in the U.S., China, Japan, and Israel to a simulated, armed RPK. The findings illustrate complex patterns of trust and compliance for each country, suggesting that the cultural dimensions identified by Hofstede [14] may influence local attitudes toward RPKs. Our results also contribute to the evolving understanding of trust. Behavioral and self-report trust measures correlated with each other across project years, suggesting convergent validity. Perhaps most importantly, our work adds to knowledge about human-robot interaction when robots assume an authoritative role.
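Convergent validity of this kind is usually assessed with a correlation coefficient. The Python sketch below computes Pearson's r between a behavioral measure (per-participant compliance rate) and a self-report measure (mean trust score). The article does not state which coefficient was used, and the values below are hypothetical, not study data.

```python
from __future__ import annotations

from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation of two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    # co-deviation and squared deviations from each sample mean
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical per-participant values for illustration only.
compliance_rate = [0.2, 0.4, 0.5, 0.7, 0.9]
self_report_trust = [35.0, 48.0, 50.0, 66.0, 80.0]
print(round(pearson_r(compliance_rate, self_report_trust), 3))
```

Because compliance rates are bounded proportions, a rank-based coefficient such as Spearman's rho is a reasonable alternative when their distribution is skewed.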

These results suggest that manipulating RPK dialog, task responsibilities, and anthropomorphism may be effective for evoking compliance. As autonomous robot technology continues to mature, RPKs may prove to be resource-effective solutions for the Department of Defense (DoD) where it serves in a peacekeeping or stabilizing role abroad. The Department of Homeland Security may benefit from deploying RPKs in high-throughput transit hubs, where a single security disturbance can have cascading effects.

A common criticism of simulation research is that participants may not behave as they would in real life because the simulated environment is not convincing or compelling. Comments from our participants indicated that they took the task seriously; however, it is still important to maximize perceived realism.

Toward that end, our team has begun to replicate the research using a more immersive task. We intend to replicate the Year 3 experiment by having American, Chinese, and Japanese participants in the U.S. perform the experimental shopping task while wearing a head-mounted display (specifically, an HTC Vive Pro with embedded Tobii eye tracking). We hope to determine whether immersion in the task environment influences participant compliance behavior, trust, and purchasing accuracy. Because our display supports eye tracking, we also expect to determine whether participants’ visual attention corresponds with the dependent measures. To date, we have constructed the environment and have begun to collect data (see Figure 4).

Acknowledgments

All co-authors contributed equally to this article. The authors gratefully acknowledge support from the AFOSR. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, express or implied, of AFOSR or the U.S. Government. The authors also gratefully acknowledge the assistance of Dr. Joachim Meyer, Tel Aviv University, who collected data for the Israeli portion of the Year 1 investigation, Mr. Menion Croll, who designed the virtual environments, and Ms. Nicole Karpinsky, who assisted with literature reviews and data collection.

References

1. Glaser, A. (2016). 11 Police robots patrolling around the world. Wired Magazine.

2. O’Toole, M. D., Bouazza-Marouf, K., Kerr, D., Gooroochurn, M., & Vloeberghs, M. (2009). A methodology for design and appraisal of surgical robotic systems. Robotica, 28(2), 297–310.

3. Joh, E. E. (2016). Policing police robots. 64 UCLA L. Rev. Discourse, 516.

4. Simon, M. (2017). The tricky ethics of Knightscope’s crime fighting robots. Wired Magazine. Retrieved from https://www.wired.com/story/the-tricky-ethics-of-knightscopes-crime-fighting-robots/

5. Kyodo News. (2018). Robot station security guard unveiled ahead of 2020 Tokyo Olympics. Retrieved from https://english.kyodonews.net/news/2018/10/2d643dba2a01-robot-station-security-guard-unveiled-to-press-ahead-of-tokyo-olympics.html

6. Muoio, D. (2016). China’s new security robot will shock you when it feels threatened. Business Insider. Retrieved from http://www.businessinsider.com/chinas-anbot-riot-robot-2016-4

7. Greshko, M. (2018). Meet Sophia, the robot that looks almost human. National Geographic. Retrieved from https://www.nationalgeographic.com/photography/proof/2018/05/sophia-robot-artificial-intelligence-science/

8. Kiesler, S., & Goetz, J. (2002). Mental models of robotic assistants. CHI ’02 Extended Abstracts on Human Factors in Computing Systems. doi:10.1145/506443.506491

9. Lee, J. D., & See, K. A. (2004). Trust in automation: Designing for appropriate reliance. Human Factors, 46(1), 50–80.

10. North Atlantic Treaty Organization. (2006). The human effects of non-lethal technologies (RTO Technical Report No. TRHFM-073). Neuilly-Sur-Seine CEDEX, France: North Atlantic Treaty Organisation.

11. Rau, P. L. P., Li, Y., & Li, D. (2009). Effects of communication style and culture on ability to accept recommendations from robots. Computers in Human Behavior, 25, 587–595.

12. Li, D., Rau, P. L. P., & Li, Y. (2010). A cross-cultural study: Effect of robot appearance and task. International Journal of Social Robotics, 2(2), 175–186.

13. Huang, H. Y., & Bashir, M. (2017). Users’ trust in automation: A cultural perspective. Proceedings of the International Conference on Applied Human Factors and Ergonomics (pp. 282–289). Springer.

14. TheCultureFactor 2018 Conference – Hofstede Insights. (2018). Retrieved from https://www.hofstede-insights.com

15. Shallice, T., & Burgess, P. W. (1991). Deficits in strategy application following frontal lobe damage in man. Brain, 114, 727–741.

16. Penny, H., Walker, J., & Gudjonsson, G. H. (2011). Development and preliminary validation of the Penny Beliefs Scale – Weapons. Personality and Individual Differences, 51, 102–106.

17. Rotter, J. (1967). A new scale for the measurement of interpersonal trust. Journal of Personality, 35(4), 651–665.

18. Schaefer, K. E. (2016). Measuring trust in human robot interactions: Development of the “Trust Perception Scale-HRI”. Robust Intelligence and Trust in Autonomous Systems (pp. 191–218). Springer US.